
How AI's Demand Signals the End of the Chip Era

Nvidia's Recent Plunge and DeepSeek Have No Connection

This past weekend, the AI company DeepSeek suddenly became a global sensation, and when Nvidia’s stock then plunged 17%, news reports attributed the drop to DeepSeek.

AiF believes this is entirely misleading.

In fact, AiF has been warning for the past 5 years that the semiconductor industry is a sunset industry, and we do not recommend investing in it.

Today’s sharp drop in Nvidia’s share price is simply the first sign that an enormous bubble, built up over the past two years, is starting to burst. Institutions and traders found an excuse to offload their holdings; DeepSeek itself is irrelevant.

AiF insists on looking beyond the surface to understand what is really happening, helping clients avoid risk at its source and achieve long-term, sustainable profits.

The recent boom in artificial intelligence has drawn widespread attention to the chip industry. Today, we’ll take a closer look at this topic, discussing AI’s need for chips, the limits of Moore’s Law, and the possibility that AI could bring about the end of the chip industry.

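The content of this blog is divided into the following four parts:

- AI’s computing power has reached a critical bottleneck

- AI’s demand for power is virtually limitless

- Key characteristics of the chip’s successor

- AI Financial’s investment strategy for 2025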

AI's computing power has reached a critical bottleneck

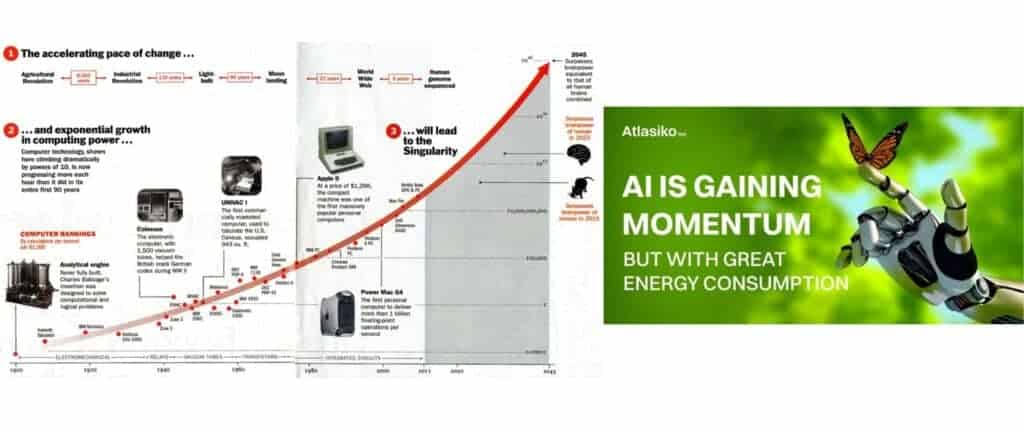

First, let’s review how artificial intelligence has developed. 2022 was declared the “Year of AI”, marking the official arrival of the artificial intelligence era. AI depends on ever-greater computing power, and that demand, served first by CPUs and later by GPUs, TPUs, and NPUs, continues to drive the development of the chip industry. The rapid growth of AI has sent the stock prices of companies such as Nvidia soaring, making Nvidia the second-largest company by market value.

- CPU stands for Central Processing Unit

- GPU stands for Graphics Processing Unit

- TPU stands for Tensor Processing Unit, developed by Google

- NPU stands for Neural Network Processing Unit

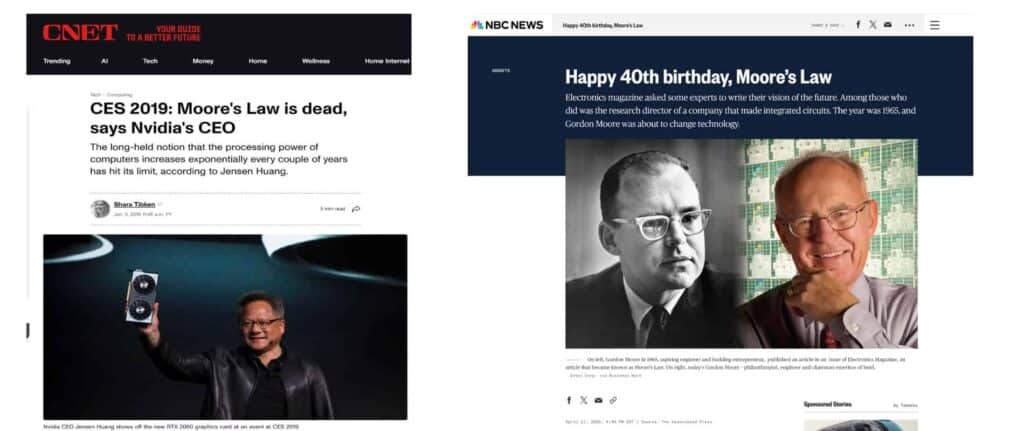

However, as technology has continued to advance, the limitations of Moore’s Law have gradually become apparent. Moore’s Law has been a driving force for technological innovation and social change since the late 20th century, and the FAGA companies (Facebook, Amazon, Google, and Apple, four well-known US technology firms) have built their business models on Moore’s insight. But as the number of transistors increases, power consumption rises and efficiency falls. Nvidia CEO Jensen Huang (Jen-Hsun Huang) and others have long argued that Moore’s Law is outdated, and Moore himself acknowledged the physical limits it faces in a 2005 interview.

So, can Moore’s Law truly continue to evolve indefinitely?

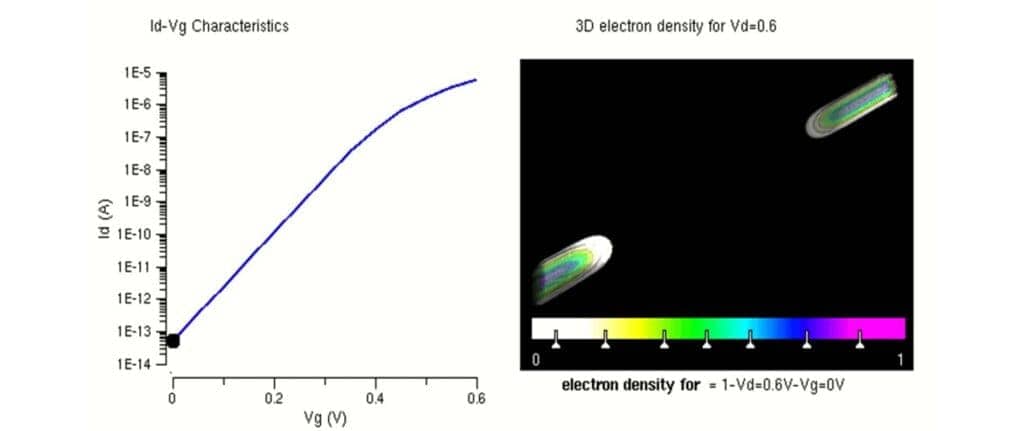

The advancement of process nodes is what kept Moore’s Law alive. Through the early improvements from 3 µm to 0.13 µm processes, Dennard scaling held: as transistors shrank, their voltage and current scaled down with them, so power per unit of chip area stayed roughly constant even as transistor counts rose. After 0.13 µm, however, Dennard scaling gradually broke down. The primary reason is that as transistors became smaller and more densely packed within the same chip area, current leaking through the channel region caused them to heat up and raised the chip’s power consumption. This broke the simple scaling relationship between transistor count, computational speed, and energy consumption.
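To make the scaling argument concrete, here is a minimal sketch built on the textbook CMOS switching-power approximation P = activity × C × V² × f (a standard relation, not a figure from this article). Under ideal Dennard scaling, shrinking features by a factor k cuts capacitance and voltage by 1/k and raises frequency by k, so each transistor’s power falls by 1/k², exactly offsetting the k² more transistors per unit area. The function names, the value k = 1.4 (roughly one process generation), and the “voltage stuck” case are illustrative assumptions, and leakage power is ignored.

```python
def dynamic_power(c: float, v: float, f: float, activity: float = 1.0) -> float:
    """Switching power of CMOS logic: P = activity * C * V^2 * f (leakage ignored)."""
    return activity * c * v**2 * f


def power_density_ratio(k: float, voltage_scales: bool) -> float:
    """Power per unit chip area after shrinking features by k, relative to before.

    Transistor density grows by k^2, capacitance shrinks by 1/k, frequency grows by k.
    With ideal Dennard scaling the supply voltage also shrinks by 1/k; after the
    breakdown it is assumed here to stay fixed (a simplification for illustration).
    """
    before = dynamic_power(c=1.0, v=1.0, f=1.0)
    v_new = 1.0 / k if voltage_scales else 1.0
    after_per_transistor = dynamic_power(c=1.0 / k, v=v_new, f=k)
    return (k**2) * after_per_transistor / before


k = 1.4  # roughly one process generation (assumed)
print(f"Dennard scaling holds: {power_density_ratio(k, True):.2f}x power density")
print(f"Voltage stuck:         {power_density_ratio(k, False):.2f}x power density")
```

With voltage scaling, power density stays at 1.00x; once voltage stops scaling, the same shrink nearly doubles it (1.96x), which is the heat problem described above.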

Because Dennard scaling has broken down, CPU manufacturers can no longer boost computing power simply by raising clock frequencies and adding cores. Within a fixed power and thermal budget, a large increase in total power draw is not an option, so only a portion of the cores can run at full speed at any given time.
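The power-budget limit in the previous paragraph is often called “dark silicon”. A minimal sketch, assuming a fixed chip power budget and identical cores that each draw the same power at full speed (both hypothetical simplifications, with made-up wattages), shows how adding cores without lowering per-core power leaves a growing share of them idle:

```python
def active_fraction(power_budget_w: float, cores: int, watts_per_core: float) -> float:
    """Fraction of cores that can run at full speed within a fixed power budget."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    affordable = power_budget_w / watts_per_core  # how many full-speed cores fit
    return min(1.0, affordable / cores)


budget = 120.0    # hypothetical chip power/thermal budget in watts
per_core = 10.0   # hypothetical full-speed power per core in watts
for n in (8, 12, 24, 48):
    print(f"{n:>2} cores -> {active_fraction(budget, n, per_core):.0%} can run at full speed")
```

Doubling the core count from 24 to 48 under the same budget halves the share of cores that can be active, from 50% to 25%.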

The industry has conducted extensive research and experimentation in an attempt to extend the life of Moore’s Law. However, none of these efforts have been able to fundamentally address the core issue facing Moore’s Law: overheating, which reduces efficiency.

In 2023, the death of Gordon Moore, who formulated Moore’s Law, was seen as the end of an era for the chip industry. As technology advances, we may need to abandon our reliance on chips and explore new technological paths.

These insurmountable physical limits have created a bottleneck in humanity’s technological progress. To break through it, moving away from chips seems inevitable.

Thus, one could say: the chip industry is already a sunset industry.

Beyond technological changes, collaboration and innovation within and outside the industry also deserve attention. For example, OpenAI CEO Sam Altman helped take the nuclear energy company Oklo public, with the aim of advancing artificial intelligence. This kind of cross-industry collaboration and innovation may represent a new direction for future technological development.

When we consider the impact of artificial intelligence (AI) on the chip industry, we must also take data center demands into account. AI training requires massive data processing and storage, which drives ever-increasing demand for high-performance chips and high-speed networks. Cloud giants such as Google, Amazon, and Microsoft are all investing in new data center architectures to meet AI needs. Google’s TPU chips have already become part of the cloud computing market, delivering performance on machine learning tasks that surpasses that of traditional CPUs and GPUs.

AI has also driven the growth of edge computing. Edge computing moves data processing and analysis closer to the data source, reducing latency and improving efficiency. This has increased demand for low-power, high-performance chips capable of running complex AI algorithms on edge devices. As a result, chip manufacturers and tech companies are developing new chip architectures tailored to edge computing to meet this emerging market’s needs.

Another trend worth noting is the diversification of AI hardware. In the past, GPUs were widely used to train deep learning models. However, as AI continues to develop, custom chips designed for specific tasks are emerging. For instance, Google’s TPUs were originally designed to accelerate neural network inference, while Intel’s Nervana chips targeted deep learning training. These customized hardware designs have significantly improved AI application performance while driving innovation in the chip industry.

Overall, the rapid development of AI has had a profound impact on the chip industry. As technology continues to advance, we can foresee that AI will place ever-growing demands on chip design, manufacturing, and application. Chip manufacturers and tech companies will need to innovate continually to meet the needs of the AI era, bringing both new opportunities and challenges to the chip industry.

AI's power demand is virtually limitless

AI’s appetite for chips may make it the very thing that ends the chip industry. At the core of this trend lies AI’s enormous power consumption, which makes it an energy-hungry monster. For example, training a large AI model such as GPT-3, the predecessor of the models behind ChatGPT, is estimated to have required about 1,300 megawatt-hours of electricity. This number may seem abstract to most people, but in practical terms that much electricity could power online video streaming for about 185 years, which shows just how much energy AI demands.
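The streaming comparison is simple unit arithmetic. The sketch below reproduces it under one loudly stated assumption: that an hour of online video streaming uses about 0.8 kWh. The article does not state this per-hour figure; it is back-solved from the article’s own 1,300 MWh and 185-year numbers. The function name is illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def streaming_years(energy_mwh: float, kwh_per_streaming_hour: float) -> float:
    """Convert an energy total into years of continuous video streaming."""
    energy_kwh = energy_mwh * 1_000  # 1 MWh = 1,000 kWh
    streaming_hours = energy_kwh / kwh_per_streaming_hour
    return streaming_hours / HOURS_PER_YEAR


# 0.8 kWh per streaming hour is an assumption, back-solved from the article's figures.
years = streaming_years(energy_mwh=1_300, kwh_per_streaming_hour=0.8)
print(f"about {years:.1f} years of streaming")
```

Changing the per-hour assumption changes the answer proportionally: at 0.4 kWh per hour, the same energy would cover roughly twice as many years.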

With the rapid development of AI, AI data centers across the U.S. already account for 2.5% of the nation’s total electricity usage. What makes this figure striking is that it would be enough to power all of New York City. From 2020 to 2022, global data center electricity consumption grew from 200–250 TWh to 460 TWh, about 2% of global electricity usage. Even more concerning is the pace of growth, 25% to 33% per year, with consumption projected to reach 1,000 TWh by 2026, roughly the electricity consumption of the entire country of Japan.
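Growth rates like these compound. A short sketch, using only the article’s figures (460 TWh in 2022 and 1,000 TWh projected for 2026), computes the average annual growth rate that the projection implies and where the quoted 25% and 33% rates would lead over the same four years. The helper names are illustrative.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


start_twh, end_twh, years = 460.0, 1_000.0, 4  # 2022 -> 2026, figures from the article
print(f"implied growth: {implied_cagr(start_twh, end_twh, years):.1%} per year")
for rate in (0.25, 0.33):
    print(f"at {rate:.0%}/yr: {project(start_twh, rate, years):,.0f} TWh by 2026")
```

With these inputs, the 1,000 TWh projection implies a little over 21% growth per year, while four years at 25% would already reach about 1,123 TWh. The quoted 25% to 33% range may therefore refer to a different period or baseline.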

In addition to the power consumed by IT equipment itself, the cooling systems required to maintain these devices also demand a significant amount of electricity. If the electricity consumption of IT equipment is represented as 1, the cooling systems consume an additional 0.4, bringing the total to 1.4. This means that the total energy consumption of a data center includes not just the electricity used by IT equipment, but also the substantial energy required to keep the systems operating at safe temperatures.
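The 1 : 0.4 : 1.4 ratio above corresponds to what the industry calls a power usage effectiveness (PUE) of 1.4, where PUE is total facility energy divided by IT equipment energy. A minimal sketch, taking a hypothetical 100 MWh of IT load as input (the function names are illustrative):

```python
def facility_energy(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy = IT energy * PUE (PUE is at least 1.0 by definition)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_mwh * pue


def overhead_energy(it_energy_mwh: float, pue: float) -> float:
    """Energy spent on cooling and other non-IT loads."""
    return facility_energy(it_energy_mwh, pue) - it_energy_mwh


it_load = 100.0  # hypothetical IT energy in MWh
print(f"total: {facility_energy(it_load, 1.4):.1f} MWh, "
      f"overhead: {overhead_energy(it_load, 1.4):.1f} MWh")
```

Every 100 MWh consumed by servers therefore means about 140 MWh drawn from the grid, 40 MWh of which goes to cooling and other overhead.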

As a result, a fierce “power grab” is underway among major corporations worldwide. This is one of the reasons OpenAI CEO Sam Altman helped the nuclear power company Oklo go public. Traditional power supplies can no longer meet AI’s massive energy demands, and nuclear power could be a viable solution. However, building nuclear power infrastructure takes time, and one of the biggest constraints on AI’s development today is an insufficient supply of electricity. By helping nuclear power companies go public, Altman aims to accelerate the expansion of energy infrastructure to meet AI’s ever-growing energy demands.

Key Characteristics of the Chip's Successor

Let’s take a look at the features and traits that the “chip terminator” should possess.

As AI’s demand for chips, and more specifically for computing power, continues to grow, existing chips can no longer meet the needs of AI development. In effect, chips have become an obstacle to AI’s progress. In the past, the steady advance of Moore’s Law drove improvements in chip performance. Over time, however, sustaining it has become increasingly difficult, and nanotechnology is approaching its limits. As a result, modern chips have hit a bottleneck and can no longer improve at the pace Moore’s Law predicts, pushing them toward obsolescence.

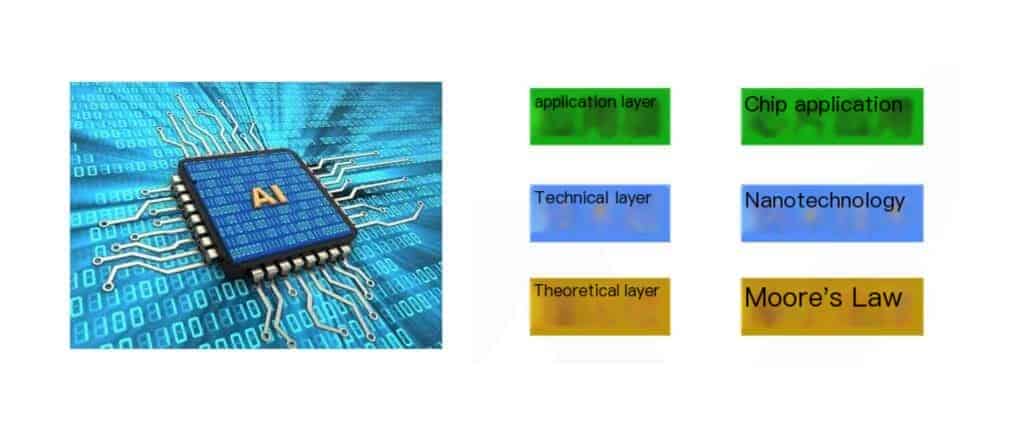

To address this issue, we need new technologies to replace existing chips. In this process, we must consider the roles of the theoretical, technical, and application layers. Moore’s Law represents the theoretical layer, providing the foundation for the development of the chip industry. Nanotechnology represents the technical layer, extending Moore’s Law through advances in manufacturing processes. However, as Moore’s Law becomes unattainable and nanotechnology reaches its limits, the application layer of chips is also coming to an end.

At this stage, the chip industry is already nearing its end. Every industry passes through theoretical, technical, and application phases, and this progression is unavoidable.

For this reason, we should not focus only on surface appearances. Chip manufacturers that are currently popular in the market, such as Nvidia, may simply be using today’s technology to maximize profits before it is completely replaced. Take Intel as an example. As a leader in the semiconductor industry, it keeps selling patents and transferring nanotechnology, not because Intel is foolish, but because it recognizes that semiconductor technology is approaching a bottleneck and that it must shift to new technological fields.

In this process of transformation, solving power consumption and heat dissipation issues becomes particularly important. Existing chips generate a large amount of heat during high-performance computing, requiring a significant amount of power for cooling. This not only wastes resources but also limits further performance improvements. Therefore, the future “chip terminators” must be able to solve these problems by using new materials, structures, or technological methods to reduce power consumption and improve heat dissipation efficiency.

Overall, although the “chip terminator” has not yet appeared, we have already seen the inevitable trend in technological development. Similar to the past development of computers, AI will undergo a transformation from large and bulky to miniaturized and efficient. This process may be even faster than the development of computers. Therefore, we need to closely monitor technological advancements and prepare for future innovations.

AI Financial's Investment Strategy for 2025

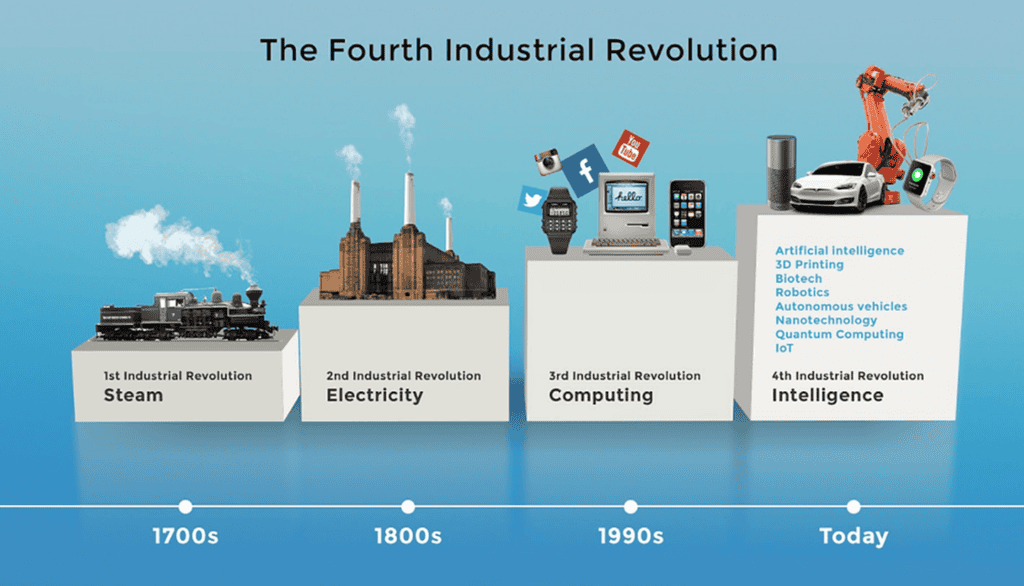

The Fourth Industrial Revolution, driven by AI, big data, IoT, and cloud computing, is accelerating both technological progress and wealth creation. To succeed, it’s crucial to invest in the right direction, because missteps can lead to significant losses.

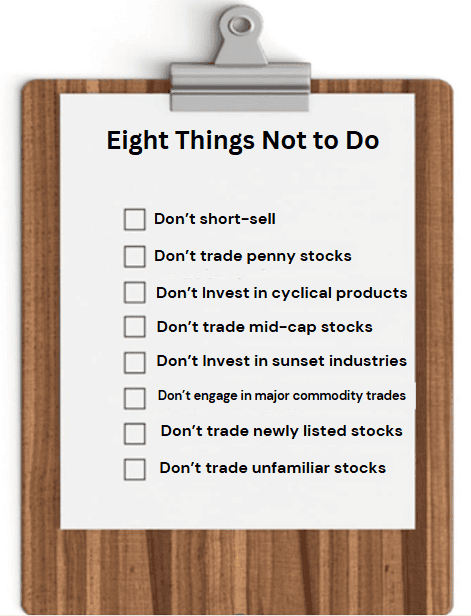

The key to successful investing is avoiding sunset industries, such as the chip sector, and prioritizing strategies that ensure capital protection. The chip industry, as a sunset sector, carries high risks and is unsuitable for long-term investment, while artificial intelligence represents a thriving sunrise industry with significant potential. Stock investments are essentially a game of probabilities, where speculation on individual stocks can lead to losses.

The correct approach is to invest in Segregated Funds, which offer capital protection and diversification by holding a basket of stocks. Even if some stocks underperform, the overall portfolio remains secure. Over the past two years, our Segregated Funds have successfully captured Nvidia’s (NVDA) rise, demonstrating the advantages of this investment tool. By using investment loans to purchase Segregated Funds, investors can benefit from stock market gains while maintaining a balanced and secure asset allocation, supporting steady wealth growth over the long term.

If you buy stocks on your own, especially individual stocks, you’re essentially betting on probabilities. We’ve repeatedly told everyone that buying individual stocks is about gambling on probabilities, not investing. Speculation might yield gains, but it can also result in total losses. You must invest, not speculate. If you don’t want to speculate, don’t bet on probabilities.

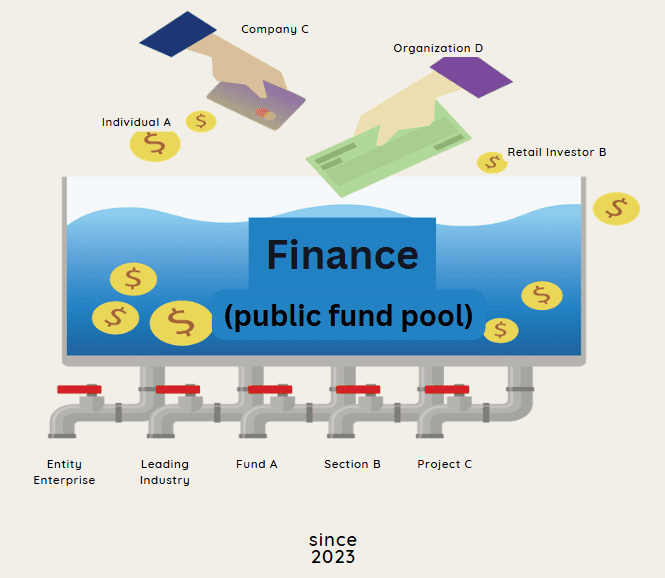

Avoiding bets on probabilities means not buying individual stocks. So what should you buy? The correct approach is to invest in public capital-preservation funds, because these funds invest in a basket of stocks. If one stock performs poorly, others in the portfolio can offset it, supporting overall growth and profits.
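The basket argument rests on diversification. A minimal sketch, assuming equal weights and that every stock has the same volatility and every pair the same correlation (an idealization; the 40% volatility and 0.3 correlation below are hypothetical), shows how portfolio volatility falls as holdings are added:

```python
import math


def portfolio_volatility(sigma: float, n: int, rho: float = 0.0) -> float:
    """Volatility of an equally weighted portfolio of n stocks.

    Assumes every stock has the same volatility `sigma` and every pair has the
    same correlation `rho`: variance = sigma^2 * (1/n + (1 - 1/n) * rho).
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    variance = sigma**2 * (1 / n + (1 - 1 / n) * rho)
    return math.sqrt(variance)


sigma = 0.40  # hypothetical annual volatility of a single stock
for n in (1, 5, 25, 100):
    print(f"{n:>3} stocks: {portfolio_volatility(sigma, n):.1%} (uncorrelated), "
          f"{portfolio_volatility(sigma, n, rho=0.3):.1%} (rho = 0.3)")
```

When stocks are uncorrelated, volatility shrinks with the square root of the number of holdings. When they move together, it levels off near sigma × √rho, which is why a basket reduces single-stock risk rather than removing market risk entirely.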

By investing in capital-preservation funds, you both diversify your risk and ensure you capture profits. That’s why we keep emphasizing that buying public capital-preservation funds is the best approach. Don’t trade individual stocks thinking you’re smart enough to beat the market. And don’t invest in indices, index funds, or ETFs.

Data from Google and Goldman Sachs that we shared earlier shows that over the past few years, when the market performed well, index funds and ETFs also gained. However, they grew more slowly and by less than the capital-preservation funds we invested in. The facts speak for themselves.

Work with professionals to invest in public capital-preservation funds. With our capital-preservation funds in particular, you can even borrow money to invest. This lets you “borrow chickens to lay eggs,” a Chinese saying for using borrowed capital to generate returns. Banks can’t offer this feature. If you go to a bank, you won’t be able to get an investment loan to buy capital-preservation funds.
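“Borrowing chickens to lay eggs” is leverage. A minimal sketch of the one-year arithmetic, using hypothetical numbers (a loan equal to the investor’s own capital at 5% interest, paid at year end), shows how borrowing changes the return on the investor’s own money in a good year and a bad year. Taxes, fees, and any fund guarantees are deliberately ignored, and the function name is illustrative.

```python
def return_on_equity(equity: float, loan: float, fund_return: float,
                     loan_rate: float) -> float:
    """One-year return on the investor's own capital when part of the
    investment is funded with a loan (taxes, fees, and guarantees ignored)."""
    ending_value = (equity + loan) * (1 + fund_return)
    interest = loan * loan_rate
    gain = ending_value - loan - interest - equity
    return gain / equity


equity, loan, rate = 100_000.0, 100_000.0, 0.05  # hypothetical inputs
for fund_return in (0.15, -0.10):
    roe = return_on_equity(equity, loan, fund_return, rate)
    print(f"fund {fund_return:+.0%} -> equity {roe:+.1%}")
```

With these inputs, a 15% fund gain becomes a 25% gain on the investor’s own capital, and a 10% fund loss becomes a 25% loss, because the loan and its interest are owed either way.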

If you want to borrow money to invest—using an investment loan—you’ll need to work with licensed and certified professionals like us at AI Financial. Only we can help you borrow money for investment purposes; banks cannot. Don’t believe us? Feel free to ask any bank if they can provide an investment loan for buying capital-preservation funds.

Only we can do it. So, it’s critical that you understand this and find professionals to help you invest. This approach will truly save you effort while maximizing your profits.

